
Fine-Tuning and Customizing LLMs

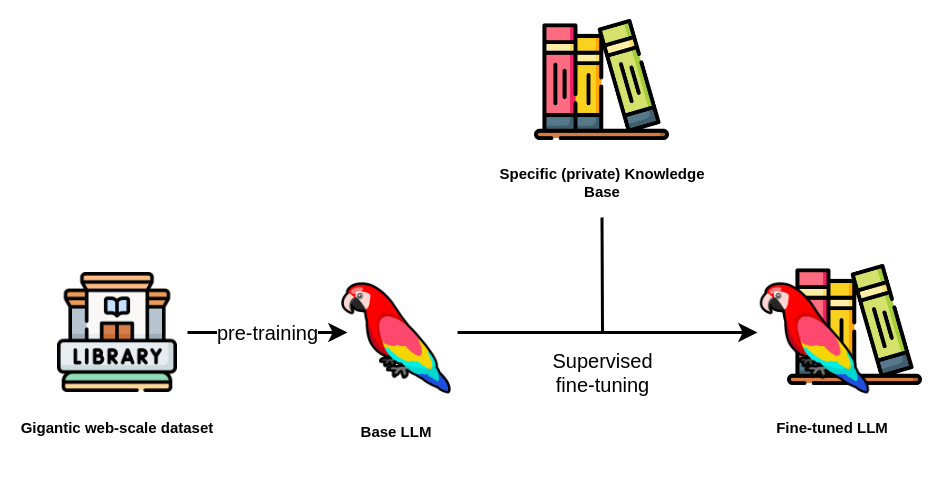

Released 1/2026
By Sandy Ludosky
MP4 | Video: h264, 1280x720 | Audio: AAC, 44.1 kHz, 2 Ch
Level: Intermediate | Genre: eLearning | Language: English + subtitle | Duration: 1h 4m | Size: 231 MB

What you'll learn

LLMs (Large Language Models) are built on general, limited knowledge and training data, so they may produce irrelevant, unsafe, or unhelpful outputs. In this course, Fine-tuning and Customizing LLMs, you'll learn how to pre-train, fine-tune, and apply reinforcement learning to LLMs to maximize their capabilities and align them with human preferences and expectations. First, you'll explore core training techniques, including supervised fine-tuning, prompt engineering, and reinforcement learning with human feedback (RLHF), to adapt pre-trained models for specific tasks and performance goals. Finally, you'll discover how to evaluate and optimize model performance by applying methods such as parameter-efficient fine-tuning (PEFT), transfer learning, and evaluation metrics to improve model reliability and reduce bias. When you're finished with this course, you'll have the skills and knowledge of fine-tuning and customizing LLMs needed to deliver accurate, context-aware, and human-aligned results across a wide range of AI-driven applications.
