Mastering Lakeflow Spark Declarative Pipelines In Databricks

Published 2/2026

Created by Fikrat Azizov


Level: Expert | Genre: eLearning | Language: English | Duration: 26 Lectures (5h 3m) | Size: 5.82 GB

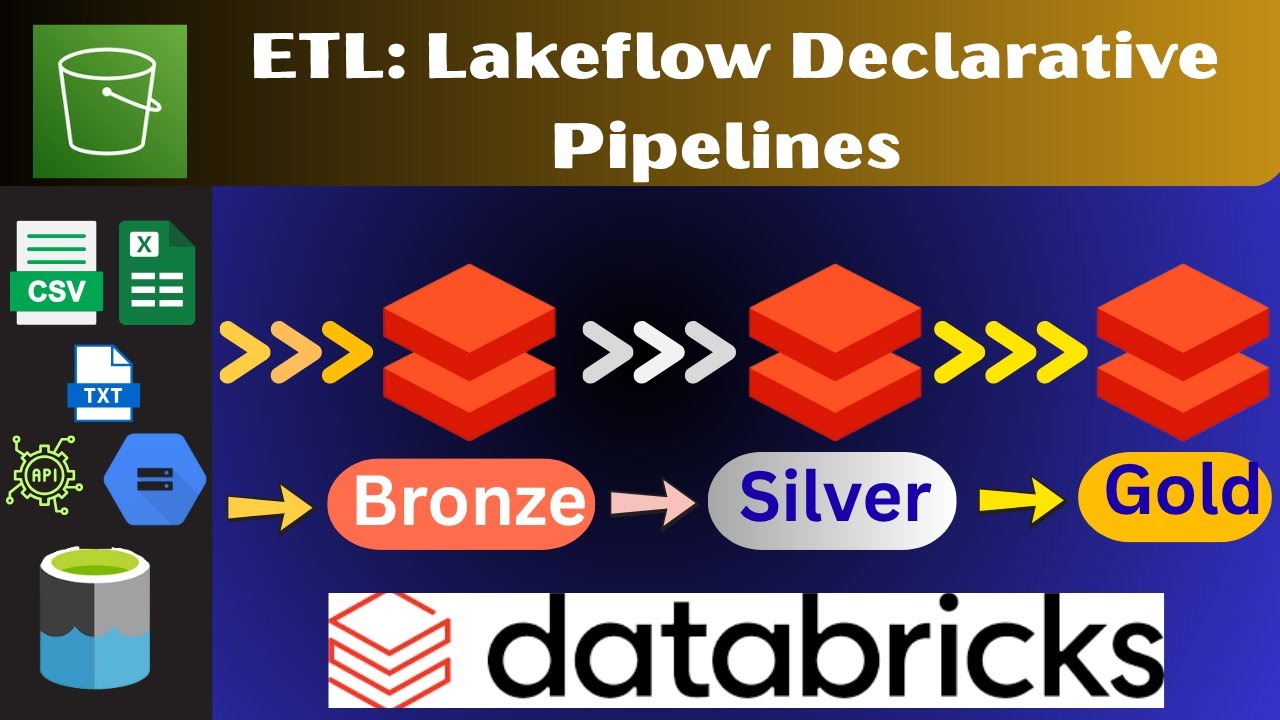

✓ Delta Architecture

✓ Overview of Lakeflow Declarative Pipelines

✓ Understanding data objects: streaming tables, materialized views and views

✓ Data Quality control with Lakeflow Declarative Pipelines

✓ Understanding flows

✓ Understanding sinks

✓ Managing Declarative Pipelines - Compute and Security

✓ Orchestration of Declarative Pipelines

✓ Monitoring Declarative Pipelines

✓ Deployment of Declarative Pipelines

Requirements

● Basics of Databricks UI navigation, PySpark and Spark SQL

Description

This course offers a practical, comprehensive guide to Lakeflow Spark Declarative Pipelines on Databricks, covering the full lifecycle from development to deployment and monitoring.

Learners are introduced to the core building blocks of Declarative Pipelines, such as streaming tables, materialized views, flows, and sinks. The limitations of streaming tables and materialized views are explained, along with best practices for designing these components.

The data quality control topic demonstrates expectations: quality rules that let you define flexible data quality conditions and choose an enforcement action (keep, drop, or fail) for records that violate them.
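To make the three enforcement actions concrete, here is a minimal plain-Python model of their semantics. It is only an illustration under stated assumptions: it does not use the Databricks API, and the names `Expectation`, `ExpectationFailed` and `apply_expectations` are hypothetical. In a real pipeline, the Python API expresses the same ideas with the `expect`, `expect_or_drop` and `expect_or_fail` decorators applied to a dataset definition.

```python
# Plain-Python model of expectation enforcement actions (warn / drop / fail).
# Hypothetical illustration only: NOT the Databricks or Spark pipelines API.
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Row = Dict[str, object]


class ExpectationFailed(Exception):
    """Raised when a 'fail' expectation is violated (models a halted update)."""


@dataclass
class Expectation:
    name: str
    condition: Callable[[Row], bool]  # returns True when the row passes
    action: str  # "warn" keeps the row, "drop" discards it, "fail" stops

    def __post_init__(self) -> None:
        if self.action not in ("warn", "drop", "fail"):
            raise ValueError(f"unknown action: {self.action!r}")


def apply_expectations(
    rows: List[Row], expectations: List[Expectation]
) -> Tuple[List[Row], Dict[str, int]]:
    """Return the surviving rows and a per-expectation violation count."""
    violations = {e.name: 0 for e in expectations}
    kept: List[Row] = []
    for row in rows:
        drop_row = False
        for e in expectations:
            if e.condition(row):
                continue
            violations[e.name] += 1  # every violation is recorded as a metric
            if e.action == "fail":
                raise ExpectationFailed(f"{e.name!r} violated by {row}")
            if e.action == "drop":
                drop_row = True
            # "warn": the row is kept; only the metric is updated
        if not drop_row:
            kept.append(row)
    return kept, violations


# Usage: one "drop" rule and one "warn" rule over three sample records.
orders = [
    {"id": 1, "amount": 10.0},
    {"id": None, "amount": 5.0},
    {"id": 3, "amount": -2.0},
]
rules = [
    Expectation("valid_id", lambda r: r["id"] is not None, "drop"),
    Expectation("non_negative_amount", lambda r: r["amount"] >= 0, "warn"),
]
kept, stats = apply_expectations(orders, rules)
print([r["id"] for r in kept])  # [1, 3]
print(stats)  # {'valid_id': 1, 'non_negative_amount': 1}
```

The record with a missing `id` is discarded, while the record with a negative amount passes through and is only counted, which mirrors the difference between dropping and warning.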

The flows and sinks topics explain various flow types, including Auto CDC and Snapshot flows, and demonstrate how Declarative Pipelines can write data to external systems, such as Delta Lake tables, streaming services, and custom destinations.

The Declarative Pipelines management topics cover compute, orchestration and security tools. Security setup is covered in detail, including user access levels, pipeline identities, and the permissions required for secure operation across environments.

The monitoring topics explain which events and metrics to monitor, as well as the interactive and programmatic monitoring tools available in Databricks.

Deployment practices are explained through DevOps cycles, source control integration, and the use of Databricks Asset Bundles (DABs) for streamlined, YAML-based deployments.

The course includes multiple quizzes to validate students' knowledge. A capstone project gives students the opportunity to apply what they have learned by building an end-to-end Lakeflow Spark Declarative Pipeline using an open-source dataset.

Students will learn an advanced approach to declarative pipeline development and prepare for the real-time streaming topics that are part of the Databricks Data Engineering Professional exam path.

Who this course is for

■ Data engineers and architects who want to deepen their knowledge of Databricks