Testing or Evaluating Generative AI: RAG, Agentic AI, Hands-on

Published 7/2025

MP4 | Video: h264, 1280x720 | Audio: AAC, 44.1 kHz, 2 Ch

Language: English | Duration: 2h 47m | Size: 1.7 GB

Mastering LLM Evaluation: Hands-on with RAG Testing, Agentic AI Testing, DeepEval, LangSmith. Learn how to test GenAI applications.

What you'll learn

Learn the end-to-end process of evaluating LLM applications, from quality criteria and choosing the right evaluation method to metrics for RAG and Agentic AI.

Learn how to evaluate RAG with the RAGAs framework. Understand how RAG works and which of its components to evaluate.

Learn how to evaluate RAG with the context precision and context recall metrics, and how to test a RAG application with Python and RAGAs.

Learn how to test and evaluate a RAG application with Pytest, and how to automate API testing of a RAG application.

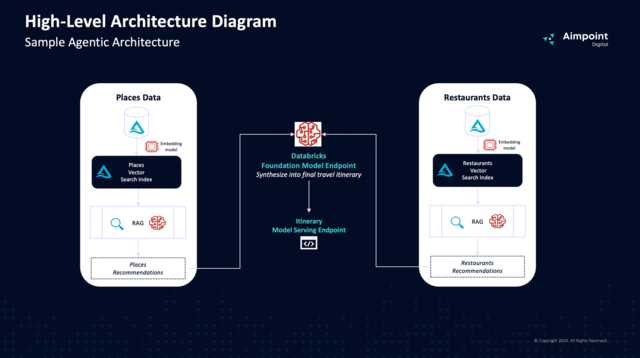

Learn how to test and evaluate an Agentic AI application using DeepEval, and how to automate Agentic AI tests with Pytest.

Learn how to trace a RAG application using LangSmith, create an evaluation dataset using Python, and evaluate against that dataset in LangSmith.
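Context precision and context recall can be illustrated with a small, LLM-free sketch. It assumes the relevance and attribution judgments are already available as booleans; RAGAs normally obtains these judgments from an LLM, so this shows only the arithmetic and is not the library's implementation. The function names are hypothetical.

```python
"""Simplified sketch of two RAG retrieval metrics (not the RAGAs implementation).

Assumption: relevance/attribution judgments are given as booleans. In RAGAs
these are usually produced by an LLM judge.
"""
from typing import Sequence


def context_precision_at_k(relevant: Sequence[bool]) -> float:
    """Average of precision@k taken at each rank k that holds a relevant chunk.

    relevant[i] is True if the i-th retrieved chunk (in rank order) is
    relevant to the question.
    """
    hits = 0
    weighted_sum = 0.0
    for k, is_relevant in enumerate(relevant, start=1):
        if is_relevant:
            hits += 1
            weighted_sum += hits / k  # precision@k, counted only at relevant ranks
    return weighted_sum / hits if hits else 0.0


def context_recall(claims_supported: Sequence[bool]) -> float:
    """Fraction of reference-answer claims supported by the retrieved context."""
    if not claims_supported:
        return 0.0
    return sum(claims_supported) / len(claims_supported)


if __name__ == "__main__":
    # Ranks 1 and 3 are relevant: (1/1 + 2/3) / 2 -> prints 0.8333
    print(round(context_precision_at_k([True, False, True]), 4))
    # 2 of 3 reference claims appear in the retrieved chunks -> prints 0.6667
    print(round(context_recall([True, True, False]), 4))
```

Precision rewards retrievers that rank relevant chunks first, while recall asks whether the retrieved context covers everything the reference answer needs; a retriever can score well on one and poorly on the other.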

Requirements

A very basic understanding of Python and Generative AI.

Description

Evaluating Large Language Model (LLM) applications is critical to ensuring reliability, accuracy, and user trust, especially as these systems are integrated into real-world solutions. This hands-on course guides you through the complete evaluation lifecycle of LLM-based applications, with a special focus on Retrieval-Augmented Generation (RAG) and Agentic AI workflows.

You'll begin by understanding the core evaluation process and exploring how to measure quality across the different stages of a RAG pipeline. You'll then dive deep into RAGAs, the community-driven evaluation framework, and learn to compute key metrics such as context relevancy, faithfulness, and hallucination rate using open-source tools.

Through practical labs, you'll create and automate tests with Pytest, evaluate multi-agent systems, and implement tests using DeepEval. You'll also trace and debug your LLM workflows with LangSmith, gaining visibility into each component of your RAG or Agentic AI system.

By the end of the course, you'll know how to create custom evaluation datasets and validate LLM outputs against ground-truth responses. Whether you're a developer, quality engineer, or AI enthusiast, this course will equip you with the practical tools and techniques needed to build trustworthy, production-ready LLM applications.

No prior experience with evaluation frameworks is required; just basic Python knowledge and a curiosity to explore. Enroll and learn how to evaluate and test Gen AI applications.
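Automating RAG tests with Pytest can be sketched as a threshold-based quality gate. Everything in it is hypothetical: `answer_question` stands in for the application under test (in practice an API call), the golden examples are invented, and `keyword_overlap` is a crude stand-in for an LLM-judged metric such as faithfulness. Pytest collects functions whose names start with `test_` and reports any failing `assert`.

```python
# Minimal sketch of a Pytest-style quality gate for a RAG application.
# All names and data below are hypothetical placeholders.

# Hypothetical golden dataset: (question, keywords the answer should contain).
GOLDEN = [
    ("What does RAG stand for?", ["retrieval", "augmented", "generation"]),
    ("Which tool traces LLM calls?", ["langsmith"]),
]

THRESHOLD = 0.8  # hypothetical pass mark for the metric below


def answer_question(question: str) -> str:
    """Hypothetical stub for the RAG application; replace with a real API call."""
    canned = {
        "What does RAG stand for?": "RAG stands for Retrieval-Augmented Generation.",
        "Which tool traces LLM calls?": "LangSmith can trace each LLM call.",
    }
    return canned[question]


def keyword_overlap(answer: str, expected: list[str]) -> float:
    """Crude stand-in metric: fraction of expected keywords found in the answer."""
    text = answer.lower()
    return sum(word in text for word in expected) / len(expected)


def test_answers_meet_threshold() -> None:
    # Pytest discovers this function by its test_ prefix; a failed assert fails the test.
    for question, expected in GOLDEN:
        score = keyword_overlap(answer_question(question), expected)
        assert score >= THRESHOLD, f"{question!r} scored {score:.2f}"
```

Keeping the pass mark in one constant makes the gate easy to tighten as the pipeline improves, and swapping `keyword_overlap` for a real evaluation metric leaves the shape of the test unchanged.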

Who this course is for

Beginners, Testers, Quality Engineers, SDETs, Data Engineers, Data Scientists, Evaluators, Gen AI Developers, Students, Researchers, Project Managers